Machine Learning 99+ Most Important MCQ (Multi choice question)

This Blog cover all possible Multi Choice Question from topic Introduction to Machine Learning, Concept Learning, Decision Tree. Total amount of question covers in This MCQ series is 100. They cover all the important aspect related to that topic provided below.

Right answer is provide in the quiz if you want mock test of [MCQ] series please write the comment below. There MCQ is very important in terms on AKTU exam, don’t forget to read part2 because it cover last year important question with answer.

If you want the Hard copy of MCQ then u can comment in the comment session. The Question is prepared after putting lot of effort so instead of copy it please share the Link so that we can able to take Benefit of our effort.

For more question click here

Machine Learning 99+ Most Important MCQ part1

Q1.What is Machine learning?

(A) The autonomous acquisition of knowledge through the use of computer programs

(B) The autonomous acquisition of knowledge through the use of manual programs

(C) The selective acquisition of knowledge through the use of computer programs

(D) The selective acquisition of knowledge through the use of manual programs

Sol. The autonomous acquisition of knowledge through the use of computer programs

Q2. Which of the factors affect the performance of learner system does not include?

(A) Representation scheme used

(B) Training scenario

(C) Type of feedback

(D) Good data structures

Sol. Good data structures

A machine learning problem represented or well define using three basic thing

1. Task to be perform

2. Performance parameter

3. Experience

Experience is very much effected by Type of Feed back and training scenario so Training scenario and Type of feed going to effect the performance of learning, as representation play a wider role as easy the representation its easy to represent or understand.

Q3. Which of the following statements is/are true about “Type-I ” and “Type-II” errors?

(i) Type l is known as false positive and Type2 is false negative.

(ii) Type 1 is known as false negative and Type2 is known as false Positive.

(iii) Type 1 error occurs when we reject a null hypothesis when it is true.

(A) Only i

(B) Only iii

(C) i and iii

(D) ii and iii

Sol. i and iii

In statistical hypothesis testing,

a type 1 error is the rejection of a true null hypothesis (also known as a finding conclusion or “false positive” ), while a type II error is the non-rejection of a false null hypothesis (also known as a finding or conclusion “false negative”).

Q4. How do you handle missing or corrupted data in a dataset?

(A) Drop missing rows or columns

(B) Replace missing values with mean/median/mode

(C) Assign a unique category to missing values

(D) All of the above

Sol. All of the above

Q5. Which is of the following option is true about FIND-S Algorithm

(A) FIND-S Algorithm starts from the most specific hypothesis and generalize it by considering only positive examples.

(B) FIND-S algorithm ignores negative examples.

(C) FIND-S algorithm finds the most specific hypothesis within H that is consistent with the positive training examples.

(D) All of the above

Sol. All of the above

FIND-S algorithm finds the most specific hypothesis within H that is consistent with the positive training examples.

FIND-S Algorithm starts from the most specific hypothesis and generalize it by considering only positive examples.

FIND-S algorithm ignores negative examples that’s why we come up with candidate elimination algorithm

Q 6. Regarding bias and variance, which of the following statements are true?(Here ‘high’ and ‘low’ are relative to the idea model.)

(A) Models which overfit have a high bias.

(B) Models which overfit have a low bias.

(C) Models which underfit have a high variance.

(D) None of these

Sol. Models which overfit have a low bias.

Explanation:

- Models which overfit have a low bias.

- Models which underfit have a low variance

Q7. Which of the following sentence is FALSE regarding regression?

(A) It relates inputs to outputs.

(B) It is used for prediction.

(C) It may be used for interpretation.

(D) It discovers causal relationships.

Sol. It discovers causal relationships.

Explanation:

Regression relates inputs to outputs, It is used for prediction and it may be used for interpretation.

Q8. You observe the following while fitting a linear regression to the data:

As you increase the amount of training data, the test error decreases, and the training error increases. The train error is quite low (almost what you expect it to), while the test error is much higher than the train error. What do you think is the main reason behind this behavior? Choose the most probable option

(A) High Variance

(B) High Model Bias

(C) High estimation bias

(D) None of the above

Sol. High Variance

Q9 Adding more basis functions in a linear model… (pick the most probably option)

(A) Decreases model bias

(B) Decreases estimation bias

(C) Decreases variance

(D) Doesn’t affect bias and variance

Sol. Decreases model bias

Q10 Which of the following will be true about k in k-NN in terms of Bias?

(A) When you increase the k the bias will be increases

(B) When you decrease the k the bias will be increases

(C) Can’t say

(D) None of these

Sol. When you decrease the k the bias will be increases

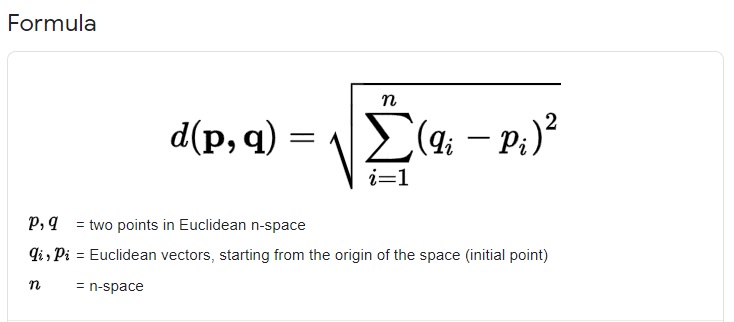

Q11 Which of the following distance measure do we use in case of categorical variables in k-NN?

1.Hamming Distance

2.Euclidean Distance

3.Manhattan Distance

(A) 1

(B) 2

(C) 3

(D) 1,2,and 3

Sol. Hamming Distance is use in case of categorical variable and Euclidean Distance and Manhattan Distance is use for continuous variable.

Q12. Imagine, you are working with “Analytics Vidhya” and you want to develop a machine learning algorithm which predicts the number of views on the articles. Your analysis is based on features like author name, number of articles written by the same author on Analytics Vidhya in past and a few other features. Which of the following evaluation metric would you choose in that case?

1. Mean Square Error

2. Accuracy

3. Fi Score

(A) Only 1

(B) Only 2

(C) Only 3

(D) 1 and 3

Sol. Mean square error

It can be considered that the article’s view count is a continuous target variable that belongs to the regression problem, so the mean square error will be used as an evaluation indicator.

Q13. At a certain university, 4% of men are over 6 feet tall and 1% of women are over 6 feet tall. The total student population is divided in the ratio 3:2 in favor of women. If a student is selected at random from among all those over six feet tall, what is the probability that the student is a woman?

(A) 25

(B) 36

(C) 3/11

(D) 1/100

Sol. 3/11

Let A={Student is Male}, B={Student is Female}.

Note that M and F partition the sample space of students.

Let T={Student is over 6 feet tall}.

We know that P(A)= 2/5 ,P(B)=3/5 , P(T/A)= 4/100 and P(T/B)= 1/100

We require P(F/T).

Using Bayes’ theorem we have:

P(F/T)= P(T/F)P(F)/ P(T/F)P(F)+P(T/M)P(M) =

(1/100* 3/5)/((1/100*3/5) + (4/100*2/5)) = 3/500 * 500/(3+8)=3/11

Q14. Macromutation operator is also known as

(A) Headed Chicken

(B) Headless chicken

(C) SPX operator

(D) BLX operator

Sol. Headless chicken

Q15 Choose the False Statement. Gradient of a continuous and differentiable function

(A) is zero at a minimum

(B) is non-zero at a maximum

(C) is zero at a saddle point

(D) decreases as you get closer to the minimum

Sol. (B) is non-zero at a maximum

Q16 Computational complexity of Gradient descent is,

(A) linear in D

(B) linear in N

(C) polynomial in D

(D) dependent on the number of iterations

Sol. Polynomial in D

Q17 Let’s say, you are using activation function X in hidden layers of neural network. At a neuron for any given input, you get the output as 0.0001″. Which of the following activation function could X represent?

(A) ReLU

(B) tanh

(C) SIGMOID

(D) None of these

Sol. tanh

Q18 Which of the following hyper parameter(s), when increased may cause random forest to over fit the data?

1. Number of Trees

2. Depth of Tree

3. Learning Rate

(A) Only I

(B) Only 2

(C) 1 and 2

(D) 2 and 3

Sol. Depth of Tree

Q19. Which of the following is a disadvantage of decision trees?

(A) Factor analysis

(B) Decision trees are robust to outliers

(C) Decision trees are prone to be overfit

(D) None of the above

Sol.(C) Decision trees are prone to be overfit

Q 20. To find the minimum or the maximum of a function, we set the gradient to zero because:

(A) The value of the gradient at extrema of a function is always zero

(B) Depends on the type of problem

(C) Both A and B

(D) None of the above

Sol.(A) The value of the gradient at extrema of a function is always zero

Q 21. In Delta Rule for error minimization

(A) Weights are adjusted w.r.to change in the output

(B) Weights are adjusted w.r.to difference between desired output and actual output

(C) weights are adjusted w.r.to difference between input and output

(D) none of the above

Sol. (B) Weights are adjusted w.r.to difference between desired output and actual output

Q 22. Back propagation is a learning technique that adjusts weights in the neural network by propagating weight changes.

(A) Forward from source to sink

(B) Backward from sink to source

(C) Forward from source to hidden nodes

(D) Backward from sink to hidden nodes

Sol. (B) Backward from sink to source

Q23 Which of the following neural networks uses supervised learning?

(A) Multilayer perceptron

(B) Self organizing feature map

(C) Hopfield network

Choose the correct answer:

(A) A only

(B) B only

(C) A and B only

(D) A and C only

Sol.(A) A only

Q24 Which of the following sentences is incorrect in reference to Information gain?

(A) It is biased towards single valued attributes

(B) It is biased towards multi valued attributes

(C) ID3 makes use of information gain

(D) The approach used by ID3 is greedy

Sol. (A) It is biased towards single valued attributes

Q25 What are two steps of tree pruning work?

(A) Pessimistic pruning and Optimistic pruning

(B) Post-pruning and Pre-pruning

(C) Cost complexity pruning and time complexity pruning

(D) None of the options

Sol.(B) Post-pruning and Pre-pruning

26. Which one of these is not a tree based learner?

(A) CART

(B) ID 3

(C) Bayesian classifier

(D) Random Forest

Sol.(C) Bayesian classifier

27. What is tree-based classifiers?

(A) Classifiers which form a tree with each attribute at one level

(B) Classifiers which perform series of condition checking with one attribute at a time

(C) Both a and b

(D) None of the options

Sol.(C) Both a and b

28. Decision Nodes are represented by

(A) Disks

(B) Squares

(C) Circles

(D) Triangles

Sol. (B) Squares

29. Previous probabilities in Bayes Theorem that are changed with help of new available information are

(A) independent probabilities

(B) posterior probabilities

(C) interior probabilities

(D) dependent probabilities

Sol.(B) posterior probabilities

30. Which of the following is true about Naive Bayes?

(A) Assumes that all the features in a dataset are equally important

(B) Assumes that all the features in a dataset are independent

(C) Both A and B

(D) None of the above options

Sol. (C) Both A and B

Q 31 The method in which the previously calculated probabilities are revised with new probabilities is classified as

(A) updating theorem

(B) revised theorem

(C) Bayes theorem

(D) dependency theorem

Sol (C) Bayes theorem

32. Which of the following is a widely used and effective machine learning algorithm based on the idea of bagging?

(A) Decision Tree

(B) Regression

(C) Classification

(D) Random Forest

Sol. (D) Random Forest

Q 33. Which of the following is a good test dataset characteristic?

(A) Large enough to yield meaningful results

(B) Is representative of the dataset as a whole

(C) Both A and B

(D) None of the above

Sol.(C) Both A and B

Q34. What is the arity in case of crossover operator in GA?

(A) Number of parents used for the operator

(B) Number of offspring used for the operator

(C) Both a and b

(D) None

Sol.(A) Number of parents used for the operator

Q 35. Which of the following statements about regularization is not connect?

(A) Using too large a value of lambda can cause your hypothesis to underfit the data.

(B) Using too large a value of lambda can cause your hypothesis to overfit the data.

(C) Using a very large value of lambda cannot hurt the performance of your hypothesis.

(D) None of the above

Sol. (C) Using a very large value of lambda cannot hurt the performance of your hypothesis.

Q 36. You are given reviews of movies marked as positive, negative, and neutral. Classifying reviews of new movie is an example of

(A) Supervised Learning

(B) Unsupervised Learning

(C) Reinforcement Learning

(D) None of these

Sol.(A) Supervised Learning because this is an example of classification

Q 37. Regarding bias and variance, which of the following statements are true?

(Here ‘high’ and ‘low’ are relative to the ideal model.)

(i) Models which overfit have a high bias.

(ii) Models which overfit have a low bias.

(iii) Models which underfit have a high variance.

(iv) Models which underfit have a low variance

(A) (i) and (ii)

(B) (ii) and (iv)

(C) (iii) and (iv)

(D) None of these

Sol. B (ii) and (iv)

Explanation:

- Models which overfit have a low bias.

- Models which underfit have a low variance

Q38. What is the purpose of restricting hypothesis space in machine learning?

(A) can be easier to search

(B) May avoid overfit since they are usually simpler (e.g. linear or low order decision surface)

(C) Both above

(D) None of the above

Sol.(C) Both above

Q39. Suppose, you got a situation where you find that your linear regression model is under fitting the data. In such situation which of the following options would you consider?

(A) You will add more features

(B) You will start introducing higher degree features

(C) You will remove Some features

(D) Both a and b.

Sol. (D) Both a and b.

40. Consider a simple linear regression model with one independent variable(X). The output variable is Y. The equation is : Y=a X+b, where a is the slope and b is the intercept. If we change the input variable (X) by 1 unit, by how much output variable (Y) will change?

(A) 1 unit

(B) By slope

(C) By intercept ,

(D) None

Sol. (B) By slope

Q 41. You have generated data from a 3- degree polynomial with some noise. What do you expect of the model that was trained on this data using a 5- degree polynomial as function class?

(A) Low bias, high variance

(B) High bias, low variance.

(C) Low bias, low variance.

(D) High bias, low variance.

Sol. (A) Low bias, high variance

Q 42. Genetic Algorithm are a part of

(A) Evolutionary Computing

(B) inspired by Darwin’s theory about evolution – “survival of the fittest”

(C) are adaptive heuristic search algorithm based on the evolutionary ideas of natural selection and genetics

(D) All of the above

Sol.(D) All of the above

Q 43. What are the 2 types of learning

(A) Improvised and un-improvised

(B) supervised and unsupervised

(C) Layered and unlayered

(D) None of the above

Sol.(B) supervised and unsupervised

Q44. Unsupervised learning is

(A) learning without computers

(B) problem based learning

(C) learning from environment

(D) learning from teachers

Sol.(C) learning from environment

Q45. In supervised learning

(A) classes are not predefined

(B) classes are predefined

(C) classes are not required

(D) classification is not done

Sol.(B) classes are predefined

Q 46. Mutating a strain is:

(A) Changing all the genes in the strain.

(B) Removing one gene in the strain.

(C) Randomly changing one gene in the strain.

(D) Removing the strain from the population.

Sol.(C) Randomly changing one gene in the strain.

Q47) Genetic Algorithms are considered pseudo-random because they:

(A) Search the solution space in a random fashion.

(B) Search the solution space using the previous generation as a starting point.

(C) Have no knowledge of what strains are contained in the next generation.

(D) Use random numbers.

Sol. (B) Search the solution space using the previous generation as a starting point.

Q48. The three gene operators we have discussed can be thought of as:

(A) Crossover: Receiving the best genes from both parents.

(B) Mutation: Changing one gene so that the child is almost like the parent.

(C) Mirror: Changing a string of genes in the child so it is like a cousinto the parent.

(D) A and B only

Sol. (D) A and B only

Q49. If a population contains only one strain, you can introduce new strains by:

(A) Using the Crossover operator.

(B) Injecting random strains into the population.

(C) Using the Mutation operator.

(D) B and C only

Sol. Injecting random strains into the population and Using the Mutation operator.

Q50. The efficiency of a Genetic Algorithm (how quickly it arrives at the best solution) is dependent upon:

(A) The initial conditions.

(B) The size of the population.

(C) The types of operators employed.

(D) All of the above

Sol. All of the above

Q51. Which of the following methods do we use, to find the best fit line for data in Linear Regression?

(A) Least Square Error

(B) Maximum Likelihood

(C) Logarithmic Loss

(D) Both A and B

Sol. Least Square Error

Q52. Among the following, which one is not “hyperparameter”?

(A) learning rate

(B) number of layers L in the neural network

(C) activation values a[l]

(D) size of the hidden layers n[l]

Sol.(A) learning rate

Q 53.

(i) The deeper layers of a neural network are typically computing more complex features of the input than the earlier layers.

(ii) The earlier layers of a neural network are typically computing more complex features of the input than the deeper layers.

Which of the following option is correct?

(A) (i) is correct and (ii) is incorrect

(B) (i) is incorrect while (ii) is correct

(C) both are correct

(D) both are incorrect

Sol.(A) (i) is correct and (ii) is incorrect

Q 54. There are certain functions with the following properties:

(i) To compute the function using a shallow network circuit, you will need a large network (where we measure size by the number of logic gates in the network)

(ii) To compute it using a deep network circuit, you need only an exponentially smaller network.

Which of the following option is correct?

(A) (i) is correct and incorrect

(B) (i) is incorrect while (ii) correct

(C) both are correct

(D) both are incorrect

Sol. both are correct

Q 55. Factor Analysis involves:

(A) dimensionality reduction technique

(B) finding correlation among variables

(C) capturing maximum variance in the data with minimum number of variables

(D) All the above

Sol.(D) All the above

Q56. Which of the following is way to reduce the skewness of a variable?

(A) Taking log of the skewed variable

(B) Dividing each value of skewed variable by standard deviation.

(C) Normalizing the skewed variable

(D) Standardizing the skewed variable.

Sol.(A) Taking log of the skewed variable

Q 57. what causes overfitting?

(A) Large number of features in the data

(B) Noise in the data

(C) both A and B

(D) None of the above

Sol.(C) both A and B

Q 58 Given an image of a person,

(i) predicting the height of that person

(ii) finding whether the person is in happy, angry or sad mood.

type of ML problem is

(A) (i) is classification while (ii) is regression problem

(B) (ii) is classification while (i) is regression problem

(C) both are classification problem

(D) both are regression problem

Sol.(B) (ii) is classification while (i) is regression problem

Q59. what does fitness function represent to describe optimization problem?

(A) Objective function

(B) Scaling function

(C) Chromosome decoding function

(D) All of the above

Sol. All of the above

Q 60 which of the following algorithms is called Lazy Learner?

(A) KNN

(B) SVM

(C) Naive Bayes

(D) Decision Tree

Sol. KNN

Q61 What are the main driving operators of GA

(A) Selection

(B) Crossover

(C) Both a and b

(D) None of these

Sol. Both a and b

Q62. which of the following is true about bagging and boosting?

(A) Both are ensemble learning techniques

(B) Both combine the output of weak learners to make consistent predictions

(C) Both can be used to solve classification as well as regression problems

(D) All of the above

Sol. (D) All of the above

Q 63. what causes underfitting?

(A) Less number of features in the data

(B) Less number of observations in the data

(C) Both a and b

(D) None of the above

Sol. Both a and b

Q 64. The performance of GA is influenced by

(A) Population size

(B) Crossover rate

(C) Mutation rate

(D) All of the above

Sol. (D) All of the above

Q65. which of the following are main components of evolutionary computation?

(A) Initial population

(B) Fitness function

(C) Crossover, mutation and selection

(D) All of the above

Sol. (D) All of the above

Q66. which of the following statement(s) is /are true

(A) Genetic algorithm mimic process from natural selection

(B) Chromosomes play vital roles in GA

(C) Both a and b

(D) Chromosomes can’t be encoded

Sol. Both A and B

Q 67. characteristics of individual is represented by

(A) Chromosomes

(B) Gray Code

(C) Initial population

(D) None of the above

Sol. (A) Chromosomes

Q68 what is the main concept Evolutionary computation?

(A) Survival of the fittest

(B) Survival of the weakest

(C) Phenotype

(D) None of these

Sol.(A) Survival of the fittest

Q 69 selective pressure is also known as

(A) Takeover Time

(B) candidate solution

(C) Proportionate time

(D) None of the above

Sol. (B) candidate solution

Q70. Which selection strategy is susceptible to a high selection pressure and low population diversity?

(A) Roulette-wheel selection

(B) Rank based selection

(C) Tournament selection

(D) All of the above

Sol.(A) Roulette-wheel selection

Q71 Choose the options that are correct regarding machine learning (ML) and artificial intelligence (AI),

(A) ML is an alternate way of programming intelligent machines.

(B) ML and AI have very different goals.

(C) ML is a set of techniques that turns a dataset into a software.

(D) AI is a software that can emulate the human mind.

Sol: (A), (C), (D)

Q72 K-fold cross-validation is

(A) linear in K

(B) quadratic in K

(C) cubic in K

(D) exponential in K

Sol. Linear in K

In k-fold cross-validation, we perform following steps

Step1: original sample data is randomly partitioned into k equal sized subsamples.

Step2: For k subsamples, a single subsample is retained as the validation data for testing the model, rest of remaining k − 1 subsamples are used as training data.

Step3: The cross-validation process is then repeated k times, with each of the k subsamples used exactly once as the validation data.

Step 4: The k results can then be averaged to produce a single estimation.

Q73 Suppose your model is overfitting. Which of the following is NOT a valid way to try and reduce the overfitting?

(A) Increase the amount of training data.

(B) Improve the optimization algorithm being used for error minimization.

(C) Decrease the model complexity.

(D) Reduce the noise in the training data.

Sol. Improve the optimization algorithm being used for error minimization.

Q74 Which of the following machine learning algorithm can be used for imputing missing values of both categorical and continuous variables?

(a) K-NN

(b) Linear Regression

(c) Logistic Regression

(d) None of the Above

Sol. (a) K-NN

Q75 Typically, value of k in k-nearest neighbors lie between-

(a) 0 to1

(b) 1 to 20

(c) 20 to 50

(d) -1 to 1

Sol. (b) 1 to 20

Q76 Which of the following statement is true about k-NN algorithm?

1. Performs of k-NN is much better in the case where all of the data have the same scale

2. k-NN struggles when the number of inputs is very large but perform well with a small number of input variables

3. k-NN makes no assumptions about the functional form of the problem

a) 1 and 2

b) 1 and 3

c) Only 1

d) All of the above

Sol. d) All of the above

Q77. One type of ANN system is based on a unit called

a) Perceptron

b) Cell

c) Pigment

d) Layer

Sol. a) Perceptron

Q78. The perceptron rule finds a successful weight vector when the training examples are linearly separable, it can fail to converge if the examples are not linearly separable?

a) True

b) False

c) Neither a) nor b)

d) All of the above

Sol. a) True

Q79. The key idea behind the delta rule is to use gradient descent to search the hypothesis space of possible weight vectors to find the weights that best fit the training examples.

a) True

b) False

c) Neither a) nor b)

d) All of the above

Sol. a) True

Q80. Which of the following are steps of the gradient descent algorithm for training linear units

a) Pick an initial random weight vector

b) Apply the linear unit to all training examples, then compute Δwi for each weight

c) Update each weight wi by adding Δwi, then repeat this process

d) All of the above

Sol. d) All of the above

Q81 Gradient descent is a strategy for searching through a large or infinite hypothesis space that can be applied whenever

S1 The hypothesis space contains continuously parameterized hypotheses

S2 The hypothesis space contains uncontinuously parameterized hypotheses

S3 The hypothesis space doesn’t contains parameterized hypotheses

S4 The error can be differentiated with respect to these hypothesis parameters

S5 The error can be differentiated with respect to these hypothesis parameters

a) S1 and S2

b) S1 and S4

c) S3 and S4

d) S2 and S5

Sol. b) S1 and S4

Q82 What is key practical difficulties in applying gradient descent

S1. Converging to a local minimum can sometimes be quite slow

S2. Converging to a local minimum can sometimes not possible

S3. If there are multiple local minima in the error surface, then there is no guarantee that the procedure will find the global minimum.

S4. there no practical difficulties in applying gradient descent

a) S1 and S2

b) S1 and S4

c) S1 and S3

d) S2 and S5

Sol. c) S1 and S3

Q 83. The idea behind stochastic gradient descent is to approximate this gradient descent search by updating weights incrementally, following the calculation of the error for each individual example

a) True

b) False

c) Neither a) nor b)

d) All of the above

Sol. a) True

Q84. which of the following true with respect to differences between standard gradient descent and stochastic gradient descent

a) In standard gradient descent, the error is summed over all examples before updating weights, whereas in stochastic gradient descent weights are updated upon examining each training example.

b) standard gradient descent is often used with a larger step size per weight update than stochastic gradient descent.

c) In cases where there are multiple local minima with respect to stochastic gradient descent can sometimes avoid falling into these local minima

d) All of the above

Sol. d) All of the above

Q85 Multilayer networks learned by the BACKPROPAGATION algorithm are capable of expressing a rich variety of nonlinear decision surfaces.

a) True

b) False

c) Neither a) nor b)

d) All of the above

Sol. a) True

Q86. The BACKPROPAGATION multilayer networks is guaranteed to converge toward some global minimum in E and not necessarily to the local minimum error.

a) True

b) False

c) Neither a) nor b)

d) All of the above

Sol. b) False

Q87. Which of the following will be Euclidean Distance between the two data point A(1,3) and B(2,3)?

(a) 1

(b) 2

(c) 4

(d) 8

Sol. (a) 1

Q89 If k=1, then which of the following statement is true?

(a) Powerful for large number of records in training partition

(b) Weak for large number of records in training partition

(c) Both a) and b)

(d) None of the above

Sol. (a) Powerful for large number of records in training partition

Q90 Which of the following is an advantage of choosing k>1?

(a) Maximizes misclassification rate

(b) Provides smoothing that reduces the risk of overfitting

(c) Minimizes classification rate

(d) None of the above

Sol. (b) Provides smoothing that reduces the risk of overfitting

Q91

Statement I: The central issue in k-NN is how to measure the distance between records

based on their predictor values.

Statement II: The k-NN classifier can easily be applied to an outcome with m classes, where m>2.

Which of the following is true?

(a) Statement I is true and Statement II is false

(b) Statement II is true and Statement I is false

(c) Both the statements are true

(d) None of the statement are true

Sol. (c) Both the statements are true

Q92 Which of the following statement is true about Baysian reasoning

S1. It is a probabilistic approach to inference.

S2. It is assumed that the quantities of interest are governed by probability distributions

a) S1 is correct

b) S2 is correct

c) S1 and S2 is correct

d) Both are false

Sol. c) S1 and S2 is correct

Q93. Why Bayesian learning methods are relevant to study of machine learning

R1: In this Calculating explicit probabilities for hypotheses are among the most practical approaches

R2: They provide a useful perspective for understanding many learning algorithms

Which of the reason are true

a) R1 is correct

b) R2 is correct

c) R1 and R2 is correct

d) Both are false

Sol. c) R1 and R2 is correct

Q94. Which of the following are Features of Bayesian Learning Methods

a) This provides a more flexible approach to learning than algorithms that completely eliminate a hypothesis if it is found to be inconsistent with any single example.

b) It accommodate hypotheses that make probabilistic predictions.

c) New instances can be classified by combining the predictions of multiple hypotheses.

d) All of the above

Sol. d) All of the above

Q95. The initial probability that hypothesis h holds, before we have observed the training data

a) Prior probability of hypothesis h

b) Prior probability of Data

c) Posterior probability of h

d) None of the above

Sol. a) Prior probability of hypothesis h

Q96. The probability P (h/D) that h holds given the observed training data D

a) Prior probability of hypothesis h

b) Prior probability of Data

c) Posterior probability of h

d) None of the above

Sol. c) Posterior probability of h

Q97. Which of the following is correct formula to calculate Posterior probability of h

a) P(h | D) = P(D | h) * P (h) / P(D)

b) P(h | D) = P(h | D) * P (D) / P(D)

c) P(h | h) = P(D | h) * P (h) / P(D)

d) P(D | h) = P(D | h) * P (h) / P(D)

Sol. a) P(h | D) = P(D | h) * P (h) / P(D)

You can get download link of the Machine Learning MCQ Pdf completely free by sharing and commenting yours email id in the comment below.

Related Post

Machine Learning 99+ Most Important MCQ

Machine Learning Most Important MCQ part2

Candidate Elimination Algorithm with Example

thank for reading this post on Machine Learning 99+ Most Important MCQ if you want more MCQ please comment and don’t forget to visit over previous post link attached at two place in this post with name Machine Learning 99+ Most Important MCQ.

#Top Machine Learning MCQ #Machine Learning MCQ Questions and Answers #Machine Learning (ML) Solved MCQs #Machine Learning MCQs and Answers With Explanation #Machine Learning – Quiz(MCQ) #Machine Learning MCQ Questions and Answer PDF Download #Machine Learning MCQ Quiz & Online Test 2023