Machine Learning

UNIT 2

Important Questions

1. Give decision trees to represent the following Boolean functions:

   · A ∧ ¬B

   · A ∨ [B ∧ C]

   · A XOR B

   · [A ∧ B] ∨ [C ∧ D]
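One way to check candidate answers to question 1 is to encode each tree and compare it with the function's truth table on every input. The Python sketch below assumes an illustrative tuple encoding of trees (a leaf is a bool; an internal node is `(attribute, subtree_if_False, subtree_if_True)`). Each tree shown is one valid answer, not the only possible one.

```python
from itertools import product

# Illustrative encoding (an assumption, not from the question): a leaf is a bool,
# an internal node is (attribute, subtree_if_False, subtree_if_True).
TREES = {
    # A AND NOT B: if A is false the answer is False; otherwise it is NOT B.
    "A and not B": ("A", False, ("B", True, False)),
    # A OR (B AND C): A = True decides at once; otherwise test B, then C.
    "A or (B and C)": ("A", ("B", False, ("C", False, True)), True),
    # A XOR B: B must be tested on both branches of A.
    "A xor B": ("A", ("B", False, True), ("B", True, False)),
    # (A AND B) OR (C AND D): the C-then-D subtree is replicated twice.
    "(A and B) or (C and D)": (
        "A",
        ("C", False, ("D", False, True)),
        ("B", ("C", False, ("D", False, True)), True),
    ),
}

TARGETS = {
    "A and not B": lambda v: v["A"] and not v["B"],
    "A or (B and C)": lambda v: v["A"] or (v["B"] and v["C"]),
    "A xor B": lambda v: v["A"] != v["B"],
    "(A and B) or (C and D)": lambda v: (v["A"] and v["B"]) or (v["C"] and v["D"]),
}


def classify(tree, values):
    """Follow the branches selected by `values` until a bool leaf is reached."""
    while not isinstance(tree, bool):
        attribute, if_false, if_true = tree
        tree = if_true if values[attribute] else if_false
    return tree


def matches_truth_table(name):
    """True if the tree agrees with the Boolean function on all 16 assignments of A-D."""
    for bits in product([False, True], repeat=4):
        values = dict(zip("ABCD", bits))
        if classify(TREES[name], values) != TARGETS[name](values):
            return False
    return True


for name in TREES:
    print(f"{name}: {matches_truth_table(name)}")
```

Each line should print True. The last tree shows why [A ∧ B] ∨ [C ∧ D] needs a larger tree than its formula suggests: the tests on C and D have to be repeated under two different branches.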

2. Consider the following set of training examples:

| Instance | Classification | a1 | a2 |
|---|---|---|---|
| 1 | + | T | T |
| 2 | + | T | T |
| 3 | – | T | F |
| 4 | + | F | F |
| 5 | – | F | T |
| 6 | – | F | T |

(a) What is the entropy of this collection of training examples with respect to the target function classification?

(b) What is the information gain of a2 relative to these training examples?
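Working through (a) and (b) by hand: there are 3 positive and 3 negative examples, so Entropy(S) = 1. Splitting on a2 gives S_T = {1, 2, 5, 6} with [2+, 2−] and S_F = {3, 4} with [1+, 1−]; both subsets have entropy 1, so Gain(S, a2) = 1 − (4/6)(1) − (2/6)(1) = 0. The Python sketch below repeats this arithmetic; the data layout and function names are illustrative choices, not part of the question.

```python
from collections import Counter
from math import log2

# The six training examples from the table: (classification, a1, a2).
EXAMPLES = [
    ("+", "T", "T"),
    ("+", "T", "T"),
    ("-", "T", "F"),
    ("+", "F", "F"),
    ("-", "F", "T"),
    ("-", "F", "T"),
]
ATTRIBUTE_INDEX = {"a1": 1, "a2": 2}


def entropy(labels):
    """Entropy in bits of a list of class labels: -sum of p_i * log2(p_i)."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(examples, attribute):
    """Entropy of the whole set minus the weighted entropy after splitting on `attribute`."""
    idx = ATTRIBUTE_INDEX[attribute]
    remainder = 0.0
    for value in sorted(set(ex[idx] for ex in examples)):
        subset = [ex[0] for ex in examples if ex[idx] == value]
        remainder += len(subset) / len(examples) * entropy(subset)
    return entropy([ex[0] for ex in examples]) - remainder


print(f"Entropy(S)  = {entropy([ex[0] for ex in EXAMPLES]):.3f}")
print(f"Gain(S, a2) = {information_gain(EXAMPLES, 'a2'):.3f}")
print(f"Gain(S, a1) = {information_gain(EXAMPLES, 'a1'):.3f}")
```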

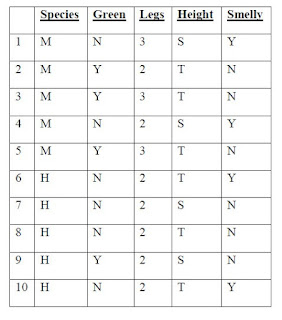

- NASA

wants to be able to discriminate between Martians (M) and Humans (H) based

on the following characteristics: Green ∈ {N, Y}, Legs ∈

{2,3}, Height ∈ {S, T}, Smelly ∈ {N, Y}

Our available

training data is as follows:

a) Greedily learn a decision tree using the ID3 algorithm and draw the tree.

b) (i) Write the learned concept for Martian as a set of conjunctive rules (e.g., if (green=Y and legs=2 and height=T and smelly=N), then Martian; else if … then Martian; else Human).

(ii) The solution of part b) (i) above uses up to 4 attributes in each conjunction. Find a set of conjunctive rules using only 2 attributes per conjunction that still results in zero error on the training set. Can this simpler hypothesis be represented by a decision tree of depth 2? Justify.

4. Discuss entropy in the ID3 algorithm with an example.
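As a starting point for question 4: for a collection S whose examples fall into c classes, with p_i the proportion of S belonging to class i, entropy (in bits) is defined as below. It is 0 when all examples share one class and 1 for an even two-class split. The second line works through a collection of 14 examples with 9 positive and 5 negative ([9+, 5−]), the PlayTennis data commonly used in textbooks:

```latex
\begin{aligned}
\mathrm{Entropy}(S) &= -\sum_{i=1}^{c} p_i \log_2 p_i \\
\mathrm{Entropy}([9+,\,5-]) &= -\tfrac{9}{14}\log_2\tfrac{9}{14} - \tfrac{5}{14}\log_2\tfrac{5}{14} \approx 0.940
\end{aligned}
```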

5. Compare entropy and information gain in ID3 with an example.
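Entropy measures the impurity of a single collection, while information gain measures the expected reduction in entropy from partitioning S on an attribute A, where S_v is the subset of S whose value of A is v. In the illustrative example below, the same [9+, 5−] collection is split by a Wind attribute into Weak = [6+, 2−] (entropy ≈ 0.811) and Strong = [3+, 3−] (entropy 1.000):

```latex
\begin{aligned}
\mathrm{Gain}(S, A) &= \mathrm{Entropy}(S) - \sum_{v \in \mathrm{Values}(A)} \frac{|S_v|}{|S|}\,\mathrm{Entropy}(S_v) \\
\mathrm{Gain}(S, \mathit{Wind}) &= 0.940 - \tfrac{8}{14}(0.811) - \tfrac{6}{14}(1.000) \approx 0.048
\end{aligned}
```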

6. Describe hypothesis space search in ID3 and contrast it with the Candidate-Elimination algorithm.

7. Relate inductive bias to decision tree learning.

8. Illustrate Occam’s razor and explain its importance with respect to the ID3 algorithm.

9. List the issues in decision tree learning. Interpret the algorithm with respect to overfitting the data.

10. Discuss the effect of reduced-error pruning in the decision tree algorithm.

11. What types of problems are best suited for decision tree learning?

12. Write the steps of the ID3 algorithm.
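The steps of ID3 can be sketched in Python as below. This is a simplified sketch under stated assumptions: attributes are discrete, examples are dicts, ties in gain go to the attribute listed first, and branches are created only for attribute values that occur in the examples (the textbook formulation also adds a majority-class leaf for possible values with no examples). The function names are illustrative.

```python
from collections import Counter
from itertools import product
from math import log2


def entropy(labels):
    """Entropy in bits of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(examples, attribute, target):
    """Entropy of the target minus the weighted entropy after splitting on `attribute`."""
    remainder = 0.0
    for value in set(ex[attribute] for ex in examples):
        subset = [ex[target] for ex in examples if ex[attribute] == value]
        remainder += len(subset) / len(examples) * entropy(subset)
    return entropy([ex[target] for ex in examples]) - remainder


def id3(examples, target, attributes):
    """Return a class label (leaf) or a node of the form {attribute: {value: subtree}}."""
    labels = [ex[target] for ex in examples]
    # All examples have the same class: return a leaf with that class.
    if len(set(labels)) == 1:
        return labels[0]
    # No attributes left to test: return a leaf with the majority class.
    if not attributes:
        return Counter(labels).most_common(1)[0][0]
    # Pick the attribute with the highest information gain (ties: first in the list).
    best = max(attributes, key=lambda a: information_gain(examples, a, target))
    remaining = [a for a in attributes if a != best]
    # Grow one branch per value of `best` seen in the examples and recurse on each subset.
    return {
        best: {
            value: id3([ex for ex in examples if ex[best] == value], target, remaining)
            for value in sorted(set(ex[best] for ex in examples))
        }
    }


# Usage: learn A AND NOT B from its complete truth table.
rows = [{"A": a, "B": b, "y": a and not b} for a, b in product([False, True], repeat=2)]
tree = id3(rows, "y", ["A", "B"])
print(tree)  # {'A': {False: False, True: {'B': {False: True, True: False}}}}
```

Here A and B have equal gain at the root, so the tie-break picks A; the learned tree matches the hand-drawn tree for A ∧ ¬B.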

13. What are the capabilities and limitations of ID3?

14. Define (a) preference bias and (b) restriction bias.

15. Explain the various issues in decision tree learning.

16. Describe reduced-error pruning.
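Reduced-error pruning holds out a validation set and replaces a subtree by a leaf labelled with the majority class of the training examples at that node whenever this does not reduce validation accuracy. The Python sketch below is a simplified bottom-up variant (the textbook version repeatedly prunes whichever node most improves validation accuracy, stopping when further pruning is harmful). The node format, the function names, and the toy tree and validation examples are all made up for illustration.

```python
# Hypothetical node format for this sketch: a leaf is a class label; an internal
# node is a dict holding the tested attribute, its branches, and the majority
# class of the training examples that reached it (the label used if pruned).


def classify(node, example):
    """Follow branches until a leaf; unseen values fall back to the node's majority class."""
    while isinstance(node, dict):
        node = node["branches"].get(example[node["attribute"]], node["majority"])
    return node


def prune(node, validation, target):
    """Bottom-up reduced-error pruning; `validation` holds the examples that reach `node`."""
    if not isinstance(node, dict):
        return node
    # First prune every child, passing down only the validation examples routed to it.
    for value, child in list(node["branches"].items()):
        routed = [ex for ex in validation if ex[node["attribute"]] == value]
        node["branches"][value] = prune(child, routed, target)
    # Only examples reaching this node can change, so comparing correct counts
    # here is equivalent to comparing whole-tree validation accuracy.
    subtree_correct = sum(classify(node, ex) == ex[target] for ex in validation)
    leaf_correct = sum(node["majority"] == ex[target] for ex in validation)
    # Replace the subtree by a leaf if that is no worse on the validation data.
    return node["majority"] if leaf_correct >= subtree_correct else node


# Usage with a made-up tree and validation set.
tree = {
    "attribute": "A",
    "majority": "yes",
    "branches": {
        "T": {"attribute": "B", "majority": "yes", "branches": {"T": "yes", "F": "no"}},
        "F": "no",
    },
}
validation = [
    {"A": "T", "B": "T", "label": "yes"},
    {"A": "T", "B": "F", "label": "yes"},
    {"A": "F", "B": "T", "label": "no"},
]
print(prune(tree, validation, "label"))
```

The split on B is removed because a "yes" leaf gets both A = T validation examples right while the subtree gets only one; the root split on A is kept because it classifies all three correctly.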

17. What are the alternative measures for selecting attributes?
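One alternative measure commonly discussed alongside ID3 is the gain ratio, which penalizes attributes with many values (such as a date or an ID) by dividing the information gain by the split information. Here S_1, ..., S_c are the subsets produced by partitioning S on the c values of A:

```latex
\begin{aligned}
\mathrm{SplitInformation}(S, A) &= -\sum_{i=1}^{c} \frac{|S_i|}{|S|} \log_2 \frac{|S_i|}{|S|} \\
\mathrm{GainRatio}(S, A) &= \frac{\mathrm{Gain}(S, A)}{\mathrm{SplitInformation}(S, A)}
\end{aligned}
```

For example, an attribute with a distinct value for each of n examples has SplitInformation = log₂ n, while an attribute that splits S into two equal halves has SplitInformation = 1, so the many-valued attribute's gain is divided by a larger number.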

18. What is rule post-pruning?
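Rule post-pruning converts the learned tree into one rule per root-to-leaf path, prunes each rule by removing preconditions whose removal does not worsen its estimated accuracy, and then sorts the pruned rules by estimated accuracy. The Python sketch below makes simplifying assumptions: it uses a nested-dict tree format `{attribute: {value: subtree}}`, estimates accuracy on a supplied set of examples (C4.5 instead uses a pessimistic estimate computed from the training data), and its function names are illustrative.

```python
from itertools import product


def tree_to_rules(tree, conditions=()):
    """One rule per root-to-leaf path: ([(attribute, value), ...], label)."""
    if not isinstance(tree, dict):
        return [(list(conditions), tree)]
    attribute, branches = next(iter(tree.items()))
    rules = []
    for value, subtree in branches.items():
        rules.extend(tree_to_rules(subtree, conditions + ((attribute, value),)))
    return rules


def rule_accuracy(conditions, label, examples, target):
    """Accuracy of the rule on the examples it covers, or None if it covers none."""
    covered = [ex for ex in examples if all(ex[a] == v for a, v in conditions)]
    if not covered:
        return None
    return sum(ex[target] == label for ex in covered) / len(covered)


def prune_rule(conditions, label, examples, target):
    """Greedily drop any precondition whose removal does not lower the rule's accuracy."""
    conditions = list(conditions)
    improved = True
    while improved and conditions:
        improved = False
        current = rule_accuracy(conditions, label, examples, target) or 0.0
        for cond in conditions:
            candidate = [c for c in conditions if c != cond]
            accuracy = rule_accuracy(candidate, label, examples, target)
            if accuracy is not None and accuracy >= current:
                conditions, improved = candidate, True
                break
    return conditions, label


# Usage: the tree for A AND NOT B, with its truth table standing in for validation data.
tree = {"A": {False: False, True: {"B": {False: True, True: False}}}}
examples = [{"A": a, "B": b, "y": a and not b} for a, b in product([False, True], repeat=2)]
rules = [prune_rule(c, label, examples, "y") for c, label in tree_to_rules(tree)]
# Finally, sort the pruned rules by accuracy so the most accurate are tried first.
rules.sort(key=lambda r: rule_accuracy(r[0], r[1], examples, "y") or 0.0, reverse=True)
for conditions, label in rules:
    print(conditions, "->", label)
```

In this example the rule (A = True ∧ B = True) → False is shortened to (B = True) → False, since every example with B = True is negative; the other two rules keep all their preconditions.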