Candidate Elimination Algorithm

Introduction to Candidate Elimination Algorithm

Candidate elimination algorithm overcomes the problem that is imposed by the Find-S algorithm. Find-S algorithm only considers positive training examples while Candidate elimination algorithm considers positive and negative both training

examples.

The candidate-Elimination algorithm computes the version space containing all (and only those) hypotheses from H that are consistent with an observed sequence of training examples.

Candidate elimination algorithm performs Bi-directional search in the hypothesis space.

Incase of positive training examples, it moves from top to bottom (Specific hypothesis to General Hypothesis) and in the case of negative training examples it moves from bottom to top (general hypothesis to specific hypothesis).

Some important terms used in Candidate Elimination Algorithm

1) Version space: – The version space which is denoted by VS [H,D] with respect to hypothesis space H and training examples D, is the subset of hypothesis from H consistent with training examples in D. V[H,D] = {h Є H | consistent (h , D)}

In simple words, version space is just an intermediate state of most specific boundary and most general boundary. Most

specific boundary is denoted by S0 ={‘φ’,’φ’,’φ’} and most general boundary is denoted by G0 = {‘?’,’?’,’?’} where

number of ‘φ’ and ‘?’ is equal to the number of attributes in training set.

Candidate Elimination Algorithm

- Initialize G to the set of maximally general hypothesis in H

- Initialize H to the set of maximally specific hypothesis in H.

- For each training example d, do

- If d is a positive example

- Remove from G any hypothesis that is inconsistent with d

- For each hypothesis s in S that is not consistent with d

- Remove s from S.

- Add to S all minimal generalizations h of s such that

- h consistent with d, some member of G is more general than h

- Remove from S any hypothesis that is more general than another hypothesis in S

- If d is a negative training example

- Remove from S any hypothesis that is inconsistent with d

- For each hypothesis g in G that is not consistent with d

- Remove g from G.

- Add to G all minimal specializations h of g such that

- h consistent with d, some member of S is more specific than h

- Remove from G any hypothesis that is less general than another hypothesis in G

Example: – we understand this algorithm with the help of below training example:

| Sky | Air Temperature | Humidity | Wind | Water | Forecast | Enjoy Swimming |

| Sunny | Warm | Normal | Strong | Warm | Same | Yes |

| Sunny | Warm | High | Strong | Warm | Same | Yes |

| Rainy | Cold | High | Strong | Warm | Change | No |

| Sunny | Warm | High | Strong | Cool | Change | Yes |

- Initialize most general hypothesis in H

G0 = <? , ? , ? , ? , ? , ?>

- Initialize most specific hypothesis in H

S0 =<φ , φ , φ , φ , φ , φ>

- Consider first positive training example:

X1=<sunny , warm , normal , strong, warm, same> +

In this candidate elimination algorithm checks S boundary and find that it is overly specific and it fails to cover the positive example. Therefore S boundary must be generalized to fit this positive training example.

S0 < φ , φ , φ , φ , φ , φ >

S1 <sunny, warm, normal, strong, warm, same>

G0, G1 < ? , ? , ? , ? , ? , ? >

4. Now we consider second positive training example:

X2 = <sunny, warm, high, strong, warm, same> +

In this case we again make changes to S and leaving G again unchanged. as S1 is not classifying the data correctly there need of some changes

S0 < φ , φ , φ , φ , φ , φ >

S1 <sunny, warm, normal, strong, warm, same>

S2 <sunny, warm, ?, strong, warm, same>

G0, G1 < ? , ? , ? , ? , ? , ? >

5. Now we consider third negative training example :

X3= <Rainy, cold, high, strong, warm, change> –

In this case, candidate elimination algorithm checks G boundary and find that it is overly generalized and it fails to cover the negative example. Therefore G boundary must be specified to fit this negative training example.

S0 < φ , φ , φ , φ , φ , φ >

S1 <sunny, warm, normal, strong, warm, same>

S2 S3 <sunny, warm, ?, strong, warm, same>

G3 <sunny, ?, ?, ?, ?, ?> <?, warm, ?, ?, ?, ?> <?, ?, ?, ?, ?, same>

G0, G1 < ? , ? , ? , ? , ? , ? >

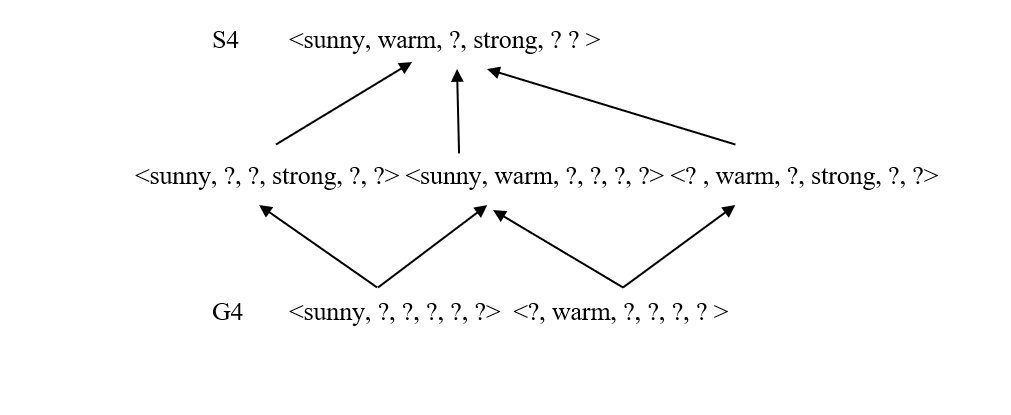

6. Now we consider fourth positive training example :

X4= < sunny, warm, high, strong, cool, change > +

This positive training example further generalizes the S boundary of the version space.

S0 < φ , φ , φ , φ , φ , φ >

S1 <sunny, warm, normal, strong, warm, same>

S2 S3 <sunny, warm, ?, strong, warm, same>

S4 <sunny, warm, ?, strong, ?, ? >

G3 <sunny, ?, ?, ?, ?, ?> <?, warm, ?, ?, ?, ?>

G0, G1 < ? , ? , ? , ? , ? , ? >

Here one member of G boundary will also removed because this member fails to cover this new positive training example.

Thus the version space which we will obtained over here is :

The Candidate Elimination Algorithm and the Find-S algorithm are both used for concept learning in machine learning, specifically for classification tasks. Here are some advantages and disadvantages of the Candidate Elimination Algorithm in comparison with Find-S:

Advantages of Candidate Elimination Algorithm over Find-S:

- Expressiveness: The Candidate Elimination Algorithm is more expressive than Find-S. It maintains a version space that includes multiple hypotheses rather than a single most specific hypothesis. This allows for a more flexible representation of the current understanding of the concept.

- Generalization: The algorithm has the ability to generalize from both positive and negative examples. It can handle both types of instances during the learning process, contributing to a more comprehensive and robust learning model.

- Flexibility: Candidate Elimination Algorithm can handle more complex classification tasks, such as those with multiple classes or non-linear decision boundaries.

- Handling Noise: The version space maintained by the Candidate Elimination Algorithm allows it to handle noisy data better than Find-S. Instead of sticking to a single hypothesis, it considers multiple hypotheses, which can help mitigate the impact of outliers and noisy examples.

- Better handling of continuous attributes: Candidate Elimination Algorithm can handle continuous attributes by creating boundaries for each attribute, which makes it more suitable for a wider range of datasets.

- Incremental Learning: Candidate Elimination supports incremental learning. As new examples are encountered, the algorithm can update its version space to accommodate new information, making it suitable for online learning scenarios.

Disadvantages of Candidate Elimination Algorithm in comparison with Find-S:

- Computational Complexity: The Candidate Elimination Algorithm maintaining and updating a version space with multiple hypotheses which may require more computational resources compared to the simpler Find-S algorithm.

- Higher memory requirements: Candidate Elimination Algorithm requires more memory to store the set of hypotheses and boundaries, which may make it less suitable for memory-constrained environments.

- Sensitivity to Redundant Data: The algorithm may be sensitive to redundant or conflicting data. If there are inconsistencies in the training data, it can lead to a large version space or incorrect generalizations.

- Overfitting: Due to the flexibility of maintaining multiple hypotheses, there is a risk of overfitting to the training data. The version space might become too large and include hypotheses that do not generalize well to unseen data.

Related Post

Machine Learning 99+ Most Important MCQ

Machine Learning Most Important MCQ part2